Image | Bohdan Kit

Self Quadrant original

Text | Luo Ji

Editor | Cheng Xin

AI-native applications: a difficult birth

After the "War of a Hundred Models", exhausted entrepreneurs gradually came to a realization: China's real opportunity lies in the application layer, and AI-native applications are the most fertile ground for the next round.

▲ Image source: the Internet.

Looking back at speeches by industry leaders over the past few months, Robin Li, Wang Xiaochuan, Zhou Hongyi and Fu Sheng all emphasized the great opportunity in the application layer.

The internet giants are all talking about AI-native: Baidu released more than 20 AI-native applications in one go; ByteDance set up a new team focused on the application layer; Tencent embedded large models into mini programs; Alibaba plans to rebuild all of its applications with Tongyi Qianwen; WPS has been handing out AI trial cards en masse...

Startups are even more feverish. A single hackathon can produce nearly 200 AI-native projects. Since the start of this year, events hosted by Wonderland, Baidu, Founder Park and others have numbered in the dozens, with thousands of projects between them, yet not one has broken out.

We have to face the fact that, although we recognize the opportunity in the application layer, large models have not upended every application; instead, every product is being retrofitted with AI indiscriminately. And although China has some of the best product managers in the world, this time they seem to have lost their touch.

In the nine months since Midjourney took off in April, the domestic AI-native application that "the whole village is pinning its hopes on" has been called for again and again, yet it still has not arrived. Why is it such a difficult birth?

Choosing the right direction matters more than working hard. Perhaps we now need to step back calmly and find the right way to approach AI-native applications.

First, build AI-native, not end-to-end

Why are native applications so hard to deliver? Part of the answer may lie in how native applications are "produced".

"We usually run four or five models at the same time and see which one performs better," a large-model entrepreneur in Silicon Valley told Self Quadrant. The team builds AI applications on top of foundation models, but in the early stage it does not commit to any single model; instead it runs the candidates against each other and finally chooses the most suitable one.

Simply put, a "horse-racing" mechanism has now been brought into the choice of large models.
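As a rough illustration, the horse-racing idea can be sketched in a few lines. This is a minimal sketch assuming each candidate model is simply a function from prompt to text and that the team has some scoring function; the model stubs, their canned outputs and the `score` heuristic are all hypothetical and do not call any real provider.

```python
from typing import Callable, Dict, List

# A "model" here is just prompt -> text. These are hypothetical stand-ins
# for calls to different foundation models; they return canned strings.
Model = Callable[[str], str]


def model_a(prompt: str) -> str:
    return f"A short answer to: {prompt}"


def model_b(prompt: str) -> str:
    return f"A longer, more detailed answer to: {prompt}, with examples"


def model_c(prompt: str) -> str:
    return "No answer"


def score(prompt: str, output: str) -> float:
    """Hypothetical quality metric: 1 point if the output mentions the prompt,
    plus a small bonus for length. Real teams would use task-specific evals."""
    relevance = 1.0 if prompt in output else 0.0
    return relevance + len(output) / 1000


def race(models: Dict[str, Model], prompts: List[str]) -> str:
    """Run every model on the same prompts and return the top scorer's name."""
    totals = {name: 0.0 for name in models}
    for prompt in prompts:
        for name, model in models.items():
            totals[name] += score(prompt, model(prompt))
    return max(totals, key=totals.get)


candidates = {"model_a": model_a, "model_b": model_b, "model_c": model_c}
print(race(candidates, ["summarize this video", "write a script"]))
```

Note that once the winner is picked, it is typically hard-wired into the product, which is exactly the "end-to-end" coupling the article goes on to question.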

This approach still has a drawback: although different large models are tried, the application ends up deeply coupled to one of them. That is still an "end-to-end" development mindset, in which one application corresponds to one large model.

A large model at the bottom layer, by contrast, serves many applications at once, so applications built on the same model for the same scenario end up with very little to distinguish them. The bigger problem is that every foundation model on the market today has its own strengths and weaknesses; none is an all-rounder (a "hexagonal warrior") that leads in every area. As a result, an application built on a single large model struggles to perform well across all of its functions.

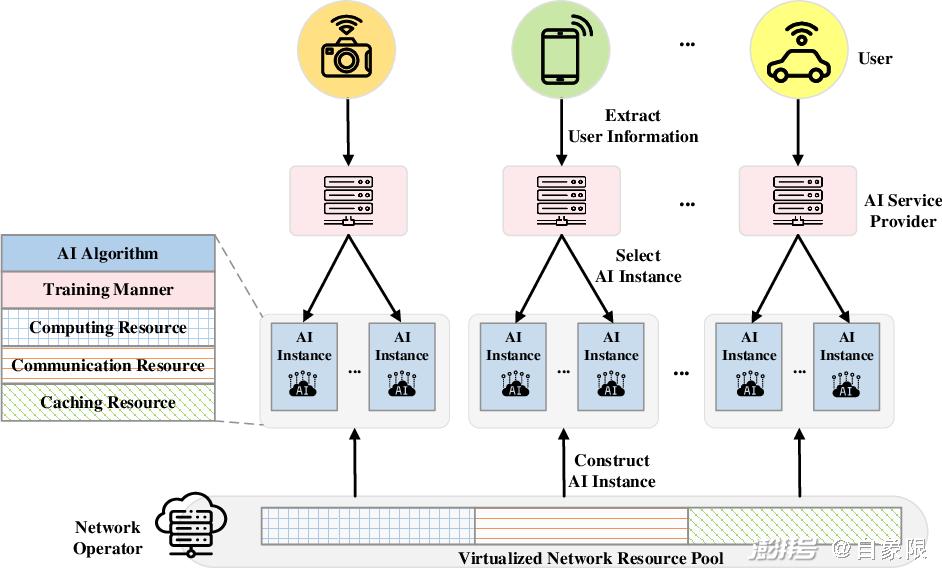

Against this background, decoupling large models from applications has emerged as a new approach.

This "decoupling" happens at two levels.

The first is decoupling the large model from the application as a whole. Since the large model is the underlying driving force of an AI-native application, the relationship between the two can be compared to the automobile industry.


For AI-native applications, the large model is like a car's engine. The same engine can be fitted to different car models, and the same car model can be paired with different engines. With different tuning, products positioned anywhere from mini-cars to luxury cars can be built.

So for the vehicle as a whole, the engine is only one part of the overall configuration and cannot be what defines the car.

By analogy, for AI-native applications the foundation model is the key to driving the application, but it should not be tightly bound to how the application is implemented. One large model can drive different applications, and the same application should also be drivable by different large models.
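In software terms, the engine analogy maps onto programming against an interface rather than a vendor. The following is a minimal sketch under that assumption: the `LanguageModel` protocol, the two engines and the two apps are hypothetical names invented for illustration, and the engines only echo the prompt instead of calling a real model.

```python
from typing import Protocol


class LanguageModel(Protocol):
    """The only contract the applications depend on: prompt in, text out."""

    def complete(self, prompt: str) -> str: ...


# Two hypothetical "engines". Real ones would wrap different vendors' SDKs;
# these just tag the prompt so we can see which engine answered.
class EngineX:
    def complete(self, prompt: str) -> str:
        return f"[EngineX] {prompt}"


class EngineY:
    def complete(self, prompt: str) -> str:
        return f"[EngineY] {prompt}"


class NotesApp:
    """Hypothetical app: it is handed a model instead of importing a vendor."""

    def __init__(self, model: LanguageModel) -> None:
        self.model = model

    def summarize(self, text: str) -> str:
        return self.model.complete(f"Summarize: {text}")


class TranslateApp:
    """A second hypothetical app that can share the same engine."""

    def __init__(self, model: LanguageModel) -> None:
        self.model = model

    def translate(self, text: str) -> str:
        return self.model.complete(f"Translate: {text}")


engine = EngineX()
# One model driving different applications...
print(NotesApp(engine).summarize("Q3 plan"))
print(TranslateApp(engine).translate("bonjour"))
# ...and the same application driven by a different model.
print(NotesApp(EngineY()).summarize("Q3 plan"))
```

Because the apps only see `complete`, swapping the engine is a one-line change at construction time; nothing inside the application has to be rewritten.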

In fact, current products already reflect this. Feishu (Lark) and DingTalk in China and Slack abroad, for example, can all work with different foundation models, and users can choose according to their own needs.

Second, within a specific application, the large model should be decoupled from each stage of the application, layer by layer.

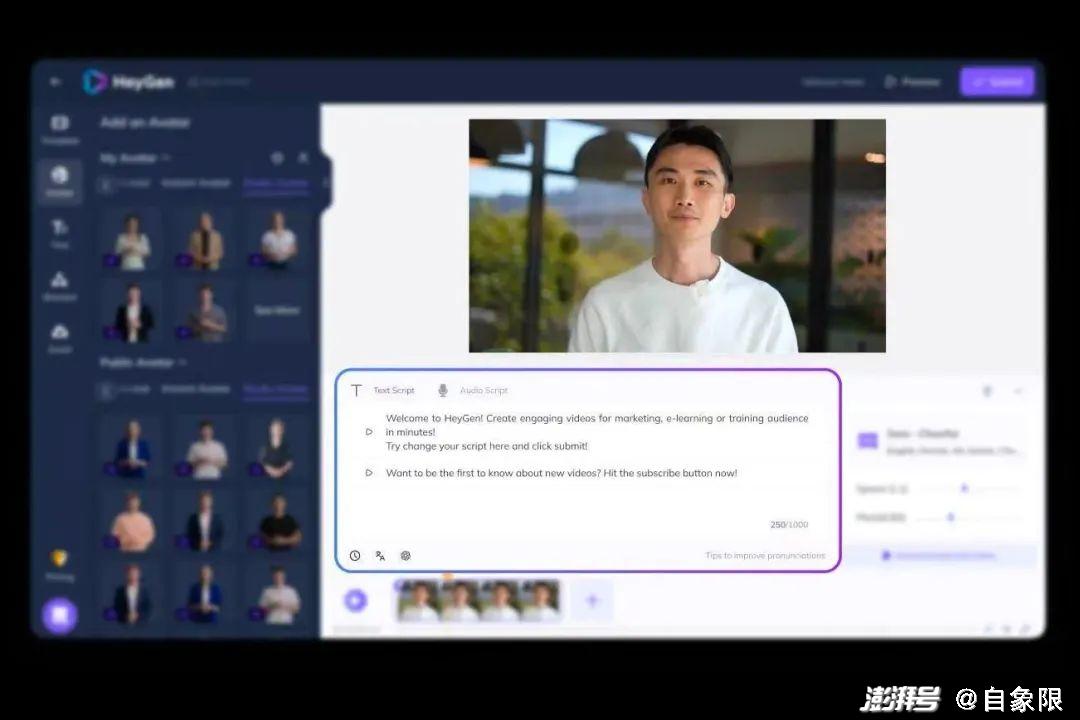

A typical example is HeyGen, an AI video company that has taken off abroad. Its annual recurring revenue reached $1 million in March this year and $18 million in November.

HeyGen currently has 25 employees. It has built its own video AI model and also integrates large language models from OpenAI and Anthropic and audio products from ElevenLabs. When producing a video, HeyGen uses different models at different stages, such as creation, script generation (text) and voice.

▲ Source: HeyGen official website

A more direct case is ChatGPT's plugin ecosystem. The Chinese video-editing app Clipping recently joined it: users can now ask ChatGPT to call the Clipping plugin to make a video, and Clipping automatically generates the video under ChatGPT's direction.

In other words, the matching between large models and applications can be many-to-many, and fine-grained enough to pick the most suitable large model for each stage. An application is then driven not by one large model but by several, or even a whole group of them.
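To make the per-stage idea concrete, here is a minimal sketch of a video pipeline in which each stage is routed to a different model, loosely modeled on the HeyGen description earlier. The stage names, the `ROUTES` table and the stub functions are hypothetical; none of them calls a real OpenAI, Anthropic or ElevenLabs API.

```python
from typing import Callable, Dict

# Hypothetical stubs; in a real system each would wrap a different provider.
def llm_write_script(idea: str) -> str:
    return f"SCRIPT({idea})"


def tts_generate_voice(script: str) -> str:
    return f"AUDIO({script})"


def video_model_render(script: str, audio: str) -> str:
    return f"VIDEO({script} + {audio})"


# One entry per stage, so any stage's model can be replaced
# without touching the others.
ROUTES: Dict[str, Callable[..., str]] = {
    "script": llm_write_script,
    "voice": tts_generate_voice,
    "render": video_model_render,
}


def make_video(idea: str, routes: Dict[str, Callable[..., str]] = ROUTES) -> str:
    """Run the stages in order, each with whatever model the table assigns."""
    script = routes["script"](idea)
    audio = routes["voice"](script)
    return routes["render"](script, audio)


print(make_video("product launch"))
# Swap only the voice stage for a different (hypothetical) model:
print(make_video("product launch", {**ROUTES, "voice": lambda s: f"OTHER_AUDIO({s})"}))
```

The routing table is the design choice that matters here: it keeps "which model does this stage" as data, so choosing the best model per link does not require rewriting the pipeline.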

With many large models serving one application, the application can draw on the strengths of each. Under this model, the division of labor in the AI industry chain will also be redefined.

It would resemble today's automobile industry chain, where engines, batteries, components and bodies each have their own specialized manufacturers, and the OEM only needs to select and assemble them to create differentiated products and bring them to market.

A new division of labor means breaking things apart and reorganizing them; without breaking the old, nothing new can be built.

Second, the embryonic form of a new ecosystem

Under a multi-model, multi-application pattern, a new ecosystem will emerge.

Based on the figure, and drawing on the experience of the internet era, we try to imagine the architecture of this new ecosystem.

When mini programs first appeared, everyone was confused about their capabilities, architecture and use cases. In the early days they developed slowly, and their numbers did not grow by leaps and bounds.

That changed with the emergence of WeChat service providers. These providers plugged into the WeChat ecosystem, understood the underlying architecture and patterns of mini programs, and worked with corporate clients to build custom mini programs for their needs. They also worked with the wider WeChat ecosystem to help clients acquire and retain customers through mini programs. Out of this group of service providers came companies such as Weimob and Youzan.

In other words, the market may not need another large model, but it does need large-model service providers.

By the same token, each large model has to be genuinely used and paid for before anyone really understands its characteristics and how best to use it. Sitting in the middle layer, service providers can not only integrate multiple large models below them but also build a healthy ecosystem with the enterprises above them.

Based on past experience, service providers fall roughly into three categories:

The first is experience-based service providers: they understand the characteristics and use cases of each large model, match them to specific industry scenarios, and win business through their service teams;

The second is resource-based service providers, similar to Weimob's business model of securing low-priced ad space in WeChat and reselling it. Access to large models may not be universally open in the future, and providers that secure enough access rights will build early barriers to entry;

The third is technical service providers. When an application embeds several large models at once, someone has to work out how to call them and chain them together while keeping the system stable and secure, along with a range of other technical problems; these are the problems technical service providers solve.
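The stability half of that third category can be illustrated with a small chaining helper. This is a minimal sketch assuming each stage has an ordered list of interchangeable models and that a failed call raises an exception; the try-in-order fallback policy, `AllModelsFailed` and every model stub are hypothetical, and security concerns are not addressed here.

```python
from typing import Callable, List

Model = Callable[[str], str]


class AllModelsFailed(Exception):
    """Raised when every candidate model for a stage has failed."""


def call_with_fallback(models: List[Model], text: str) -> str:
    """Try each model in order and return the first successful output."""
    errors = []
    for model in models:
        try:
            return model(text)
        except Exception as exc:  # a real system would catch narrower errors
            errors.append(f"{getattr(model, '__name__', 'model')}: {exc}")
    raise AllModelsFailed("; ".join(errors))


def run_chain(stages: List[List[Model]], text: str) -> str:
    """Call stages in series, feeding each stage's output into the next."""
    for candidates in stages:
        text = call_with_fallback(candidates, text)
    return text


# Hypothetical models: the primary one always times out.
def flaky_primary(text: str) -> str:
    raise TimeoutError("primary model timed out")


def backup(text: str) -> str:
    return text.upper()


def add_suffix(text: str) -> str:
    return text + "!"


print(run_chain([[flaky_primary, backup], [add_suffix]], "story idea"))
```

Here the first stage silently falls back from the failing model to its backup, so the chain still completes; only if every candidate for a stage fails does the whole chain stop with an error listing each failure.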

According to Self Quadrant's observations, prototypes of large-model service providers have begun to appear over the past six months, though in the form of enterprise services that teach companies how to apply various large models. Building applications is also gradually taking the shape of a workflow.

"Say I want to make a video. First I give Claude the idea for a script and have it write a story, then I copy and paste that into ChatGPT and use its reasoning ability to break it down into a script, then I call the Clipping plugin to turn the script directly into a video. If some images along the way are not accurate, I regenerate them with Midjourney, and finally the video is done. If a single application could call all of these capabilities at once, that would be a truly native application," an entrepreneur told us.

Of course, many problems remain before a multi-model, multi-application ecosystem can really be built: How should multiple models communicate with each other? How can algorithms get the most out of each model call? What form of cooperation works best? These are both challenges and opportunities.

Judging from past experience, AI applications may first develop in a scattered, point-by-point way and then gradually unify and integrate.

For example, asking questions, generating images and making slide decks may each require a separate application today, but in the future they may be integrated into a single product that moves closer to a platform, just as ride-hailing, food delivery, bookings and other services gradually converged into super apps. These diverse needs will in turn pose more varied challenges to model capabilities.

In addition, AI-native applications will upend current business models, and the money flowing through the industry chain will be redistributed. Baidu will become a shelf for knowledge and Alibaba a shelf for goods; all business models will return to their essence of meeting consumers' real needs, and redundant processes will be eliminated.

On this basis, value creation is only one side of the question; how to rebuild business models has become the more important issue for investors and entrepreneurs to think through.

For now, we are still on the eve of the AI-native application boom. Once the structure takes shape, with foundation models at the bottom, large-model service providers in the middle and startups of every kind at the top, a clear division of labor and healthy cooperation will allow AI-native applications to arrive in batches.

The images in this article come from the Internet.

For news tips, submissions, reprints, partnerships or other inquiries, add our editorial assistant on WeChat.

WeChat ID: yanzu0303
